Probability basics for Duckietown

Contents

What you will need

Foundations of mathematics

What you will get

Foundations of probability theory

Probability basics for Duckietown#

In this chapter we give a brief review of some basic probabilistic concepts.

For a more in-depth treatment of the subject we refer the interested reader to a textbook such as [PP02].

Random Variables#

The key underlying concept in probabilistic theory is that of an event, which is the output of a random trial. Examples of an event include the result of a coin flip turning up HEADS or the result of rolling a die turning up the number “4”.

Definition: Random Variable

A (either discrete or continuous) variable that can take on any value that corresponds to the feasible output of a random trial.

For example, we could model the event of flipping a fair coin with the random variable \(X\). We write the probability that \(X\) takes \(\heads\) as \(p(X=\heads)\). The set of all possible values for the variable \(X\) is its domain, \(\aset{X}\).

In this case,

Since \(X\) can only take one of two values, it is a binary random variable. In the case of a die roll,

and we refer to this as a discrete random variable. If the output is real value or a subset of the real numbers, e.g., \(\aset{X} = \mathbb{R}\), then we refer to \(X\) as a continuous random variable.

Consider once again the coin tossing event. If the coin is fair, we have

Here, the function \(p(x)\) is called the probability mass function or PMF. The PMF is shown in The Probability Mass Function (PMF) for a fair coin toss.

Fig. 230 The Probability Mass Function (PMF) for a fair coin toss#

Here are some very important properties of \(p(x)\):

\(0\leq p(x) \leq (1)\)

\(\sum_{x\in\aset{X}}=1\)

In the case of a continuous random variable, we will call this function \(f(x)\) and call it a probability density function, or PDF.

In the case of continuous RVs, technically the \(p(X=x)\) for any value \(x\) is zero since \(\aset{X}\) is infinite. To deal with this, we also define another important function, the cumulative density function, which is given by \(F(x) \triangleq p(X\leq x)\), and now we can define \(f(x) \triangleq \frac{d}{dx}F(x)\).

A PDF and corresponding CDF are shown in The continuous probability and cumulative density functions (PDF and CDF, respectively). This happens to be a Gaussian distribution, defined more precisely in .

Fig. 231 The continuous probability and cumulative density functions (PDF and CDF, respectively)#

Joint Probabilities#

If we have two different RVs representing two different events \(X\) and \(Y\), then we represent the probability of two distinct events \(x \in \aset{X}\) and \(y \in \mathcal{Y}\) both happening, which we will denote as follows:

The function \(p(x,y)\) is called joint distribution.

Conditional Probabilities#

Again, considering that we have to RVs, \(X\) and \(Y\), imagine these two events are linked in some way. For example, \(X\) is the numerical output of a die roll and \(Y\) is the binary even-odd output of the same die roll. Clearly these two events are linked since they are both uniquely determined by the same underlying event (the rolling of the die). In this case, we say that the RVs are dependent on one another.

In the event that we know one of events, this gives us some information about the other. We denote this using the following conditional distribution

If you think this is very easy that’s good, but don’t get over-confident.

The joint and conditional distributions are related by the following (which could be considered a definition of the joint distribution):

and, similarly, the following could be considered a definition of the conditional distribution:

In other words, the conditional and joint distributions are inextricably linked: you can’t really talk about one without the other.

If two variables are independent, then the following relation holds: \(p(x,y)=p(x)p(y)\).

Bayes’ Rule#

Upon closer inspection of \eqref{eq:joint}, we can see that the choice of which variable to condition upon is completely arbitrary. We can write:

and then after rearranging things we arrive at one of the most important formulas for mobile robotics, Bayes’ rule:

Exactly why this formula is so important might (at some point) be covered in more detail in later sections, but we will give an initial intuition here.

Consider that the variable \(X\) represents something that we are trying to estimate but cannot observe directly, and that the variable \(Y\) represents a physical measurement that relates to \(X\). We want to estimate the distribution over \(X\) given the measurement \(Y\), \(p(x|y)\), which is called the posterior distribution. Bayes’ rule lets us to do this.

For every possible state, you take the probability that this measurement could have been generated, \(p(y|x)\), which is called the measurement likelihood, you multiply it by the probability of that state being the true state, \(p(x)\), which is called the prior, and you normalize over the probability of obtaining that measurement from any state, \(p(y)\), which is called the evidence.

Answer: \(\approx\) 33.2%.

This answer should surprise you. It highlights the power of the prior.

Marginal Distribution#

If we already have a joint distribution \(p(x,y)\) and we wish to recover the single variable distribution \(p(x)\), we must marginalize over the variable \(Y\). The involves summing (for discrete RVs) or integrating (for continuous RVs) over all values of the variable we wish to marginalize:

This can be thought of as projecting a higher dimensional distribution onto a lower dimensional subspace. For example, consider A 2D joint data and 2 marginal 1D histogram plots, which shows some data plotted on a 2D scatter plot, and then the marginal histogram plots along each dimension of the data.

Fig. 232 A 2D joint data and 2 marginal 1D histogram plots#

Marginalization is an important operation since it allows us to reduce the size of our state space in a principled way.

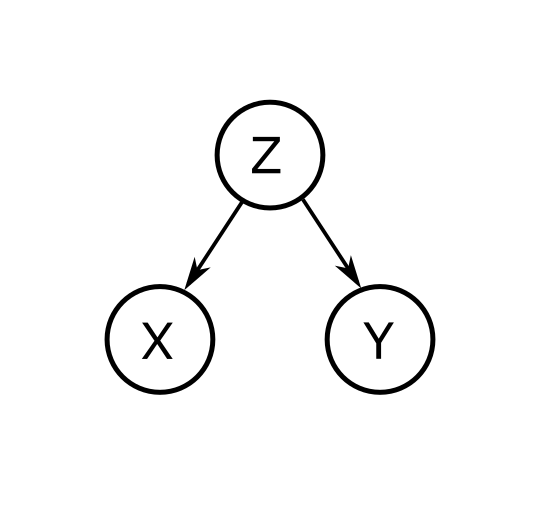

Conditional Independence#

If two RVs, \(X\) and \(Y\) are correlated, we may be able to encapsulate the dependence through a third random variable \(Z\). Therefore, if we know \(Z\), we may consider \(X\) and \(Y\) non correlated to each other.

Fig. 233 A graphical representation of the conditional independence of \(X\) and \(Y\) given \(Z\)#

Note

Graphical models deserve an independent discussion. Doing a good job of sufficiently describing graphical models and the dependency relations that they express requires careful thought. Until the editors of this manual get it it, we refer curious readers to e.g.: Koller and Friedman.

Moments#

The \(n\)th moment of an RV, \(X\), is given by \(E[X^n]\) where \(E[]\) is the expection operator with:

in the discrete case, and

in the continuous case.

The 1st moment is the mean, \(\mu_X=E[X]\).

The \(n\)-th central moment of an RV, \(X\) is given by \(E[(X-\mu_X)^n]\).

The second central moment is called the covariance, \(\sigma^2_X=E[(X-\mu_X)^2]\).

Entropy#

Definition: Entropy

The entropy of an RV is a scalar measure of the uncertainty about the value the RV.

A common measure of entropy is the Shannon entropy, whose value is given by

This measure originates from communication theory and literally represents how many bits are required to transmit a distribution through a communication channel. For many more details related to information theory, we recommend [Mac02].

As an example, we can easily write out the Shannon entropy associated with a binary RV (e.g. flipping a coin) as a function of the probability that the coin turns up heads (call this \(p\)):

Fig. 234 The Shannon entropy of a binary RV \(X\).#

Notice that our highest entropy (uncertainty) about the outcome of the coin flip is when it is a fair coin (equal probability of heads and tails). The entropy decays to 0 as we approach \(p=0\) and \(p=1\) since in these two cases we have no uncertainty about the outcome of the flip. It should also be clear why the function is symmetrical around the \(p=0.5\) value.

The Gaussian Distribution#

In mobile robotics we use the Gaussian, or normal, distribution a lot.

The 1-D Gaussian distribution pdf is given by:

where \(\mu\) is called the mean of the distribution, and \(\sigma\) is called the standard deviation. A plot of the 1D Gaussian was previously shown in The continuous probability and cumulative density functions (PDF and CDF, respectively).

We will rarely deal with the univariate case and much more often deal with the multi-variate Gaussian:

The value from the exponent: \((\state-\mathbf{\mu})^T\mathbf{\Sigma}^{-1}(\state - \mathbf{\mu})\) is sometimes written \(||\state - \mathbf{\mu}||_\mathbf{\Sigma}\) and is referred to as the Mahalanobis distance or energy norm.

Mathematically, the Gaussian distribution has some nice properties as we will see. But is this the only reason to use this as a distribution. In other words, is the assumption of Gaussianicity a good one?

There are two very good reasons to think that the Gaussian distribution is the “right” one to use in a given situation.

The central limit theorem says that, in the limit, if we sum an increasing number of independent random variables, the distribution approaches Gaussian

It can be proven [editor’s note: add reference] that the Gaussian distribution has the maximum entropy subject to a given value for the first and second moments. In other words, for a given mean and variance, it makes the least assumptions about the other moments.

Suggested exercise: derive the formula for Gaussian entropy.

Banana distributions?#

Comment from AC

The Banana distribution is the official distribution in robotics!

Counter-comment from LP